Machine Learning in the Browser with Deep Neural Networks

A Visual Introduction

Oliver Zeigermann / @DJCordhose

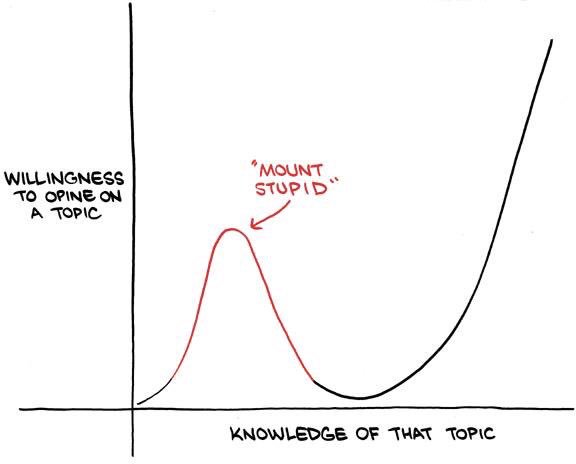

Where I am: at Mount Stupid

What is machine learning?

science of getting computers to act without being explicitly programmed

Example: Tell an orange from an apple

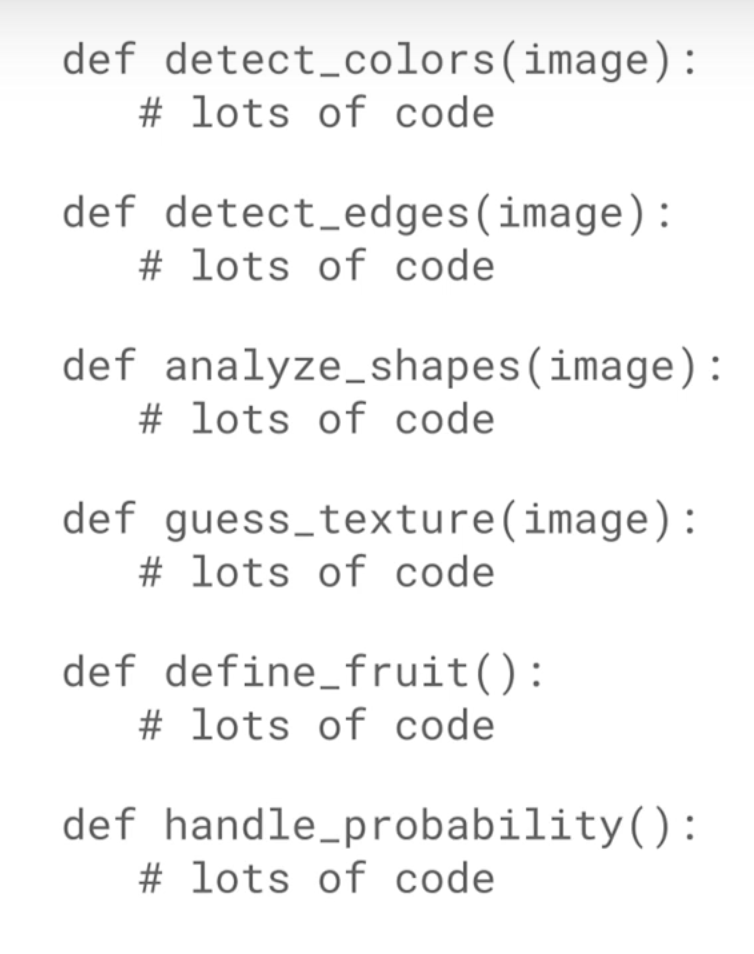

Programmatic Solution

Checking the color

What about BW?

Banana?

Just too many rules to write by hand

And you probably forgot one

Rather let the machine learn all the rules for you

By learning from examples

Applications of machine learning?

- self-driving cars

- speech recognition

- fraud detection

- recommendations

- playing Go

Plan for today

Part I: Introduction to Deep Neural Networks

Part II: Doing the magic using ConvNetJS and TensorFlow Playground

Part I: Introduction to Deep Neural Networks

Hottest Shit in the area of supervised machine learning

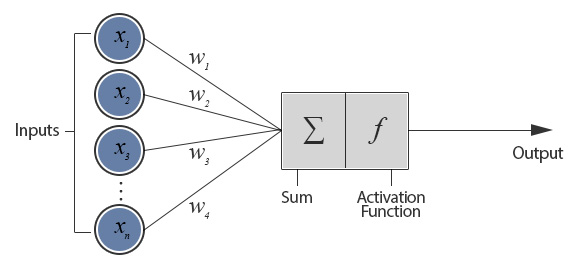

The perceptron - where it all begins

http://www.theprojectspot.com/tutorial-post/introduction-to-artificial-neural-networks-part-1/7

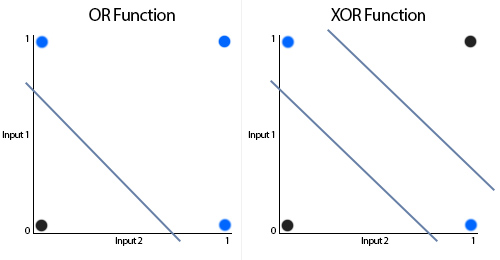

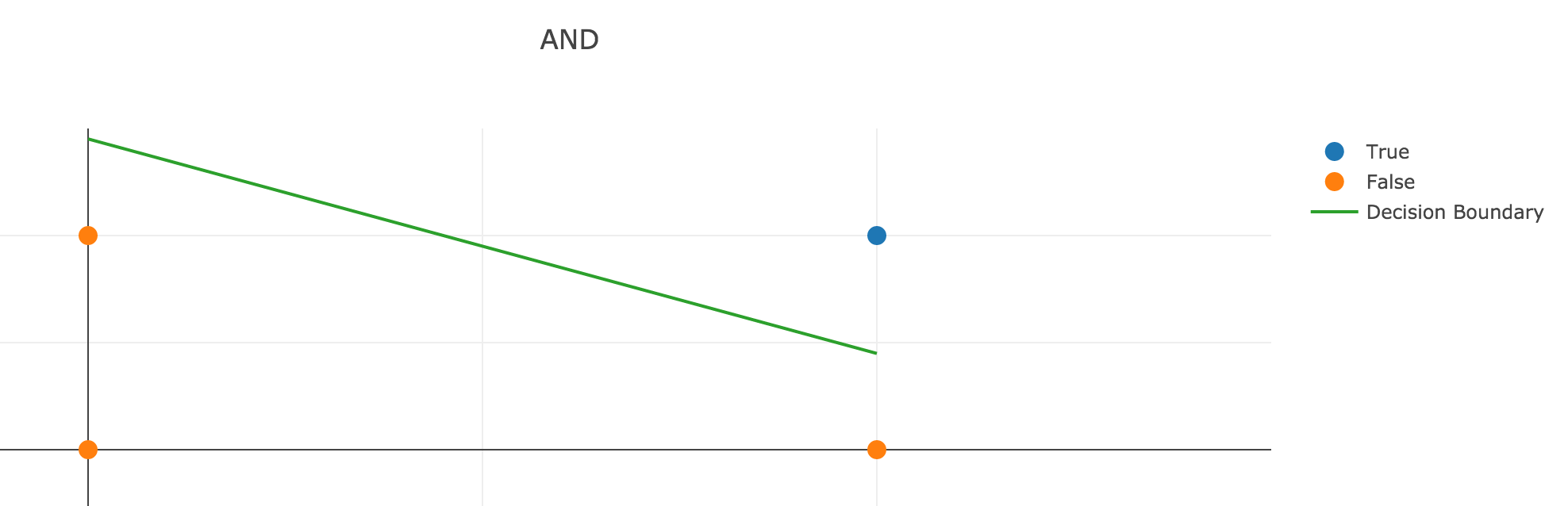

What can a perceptron do?

- output separates plane into two regions using a line

- such regions are called linearly separable

- can emulate most logic functions (NOT, AND, OR, NAND)

- can be trained by adjusting weights of inputs based on error in output
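The points above can be sketched in a few lines of plain JavaScript. This is a minimal illustration, not code from the talk: the function names `predict` and `trainPerceptron`, the learning rate and the number of epochs are all assumptions chosen for the example. It trains a single perceptron on the AND function using the classic perceptron learning rule.

```javascript
// A perceptron: weighted sum of the inputs plus a bias, passed through a step function.
function predict(weights, bias, inputs) {
  let sum = bias;
  for (let i = 0; i < inputs.length; i++) {
    sum += weights[i] * inputs[i];
  }
  return sum > 0 ? 1 : 0;
}

// Perceptron learning rule: nudge each weight by learningRate * error * input.
// (Hypothetical helper; starts from all-zero weights for two inputs.)
function trainPerceptron(samples, epochs, learningRate) {
  const weights = [0, 0];
  let bias = 0;
  for (let epoch = 0; epoch < epochs; epoch++) {
    for (const { inputs, target } of samples) {
      const error = target - predict(weights, bias, inputs);
      for (let i = 0; i < weights.length; i++) {
        weights[i] += learningRate * error * inputs[i];
      }
      bias += learningRate * error;
    }
  }
  return { weights, bias };
}

// Truth table of AND, which is linearly separable.
const andSamples = [
  { inputs: [0, 0], target: 0 },
  { inputs: [0, 1], target: 0 },
  { inputs: [1, 0], target: 0 },
  { inputs: [1, 1], target: 1 },
];

const andGate = trainPerceptron(andSamples, 20, 0.1);
const andOutputs = andSamples.map(s => predict(andGate.weights, andGate.bias, s.inputs));
console.log(andOutputs);
```

The learned weights and bias describe a line in the input plane; everything on one side of it is classified as 1, everything else as 0.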

perceptron training visualization (initial version provided as a courtesy of Jed Borovik)

XOR - What a perceptron can NOT do

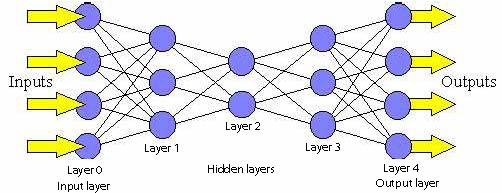

Feedforward Neural Networks - putting perceptrons together
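A small sketch of why putting perceptrons together helps: XOR is not linearly separable, but it can be written as AND(OR(a, b), NAND(a, b)), and each of those three functions is a single perceptron. The weights below are hand-picked for illustration (not learned and not taken from the talk), and `perceptron` is a hypothetical helper.

```javascript
// Step-activation perceptron with fixed, hand-picked weights (nothing is learned here).
function perceptron(weights, bias) {
  return inputs => {
    const sum = inputs.reduce((acc, x, i) => acc + x * weights[i], bias);
    return sum > 0 ? 1 : 0;
  };
}

// Hidden layer: two perceptrons looking at the same inputs.
const or = perceptron([1, 1], -0.5);
const nand = perceptron([-1, -1], 1.5);

// Output layer: one perceptron combining the hidden outputs.
const and = perceptron([1, 1], -1.5);

function xor(a, b) {
  return and([or([a, b]), nand([a, b])]);
}

const xorTable = [[0, 0], [0, 1], [1, 0], [1, 1]].map(([a, b]) => xor(a, b));
console.log(xorTable); // [0, 1, 1, 0]
```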

Backpropagation

- Errors in output can be used for training

- Go through the network in reverse order

- Going from output layer to input layer

- Error will correct weights of perceptrons

- The more a perceptron has contributed to an error, the bigger the correction
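To make the bullet points concrete, here is a minimal sketch of one backpropagation step for a tiny network (2 inputs, 2 tanh hidden units, 1 linear output) with a squared-error loss. The network size, the starting weights, the learning rate and the names `forward`, `loss` and `backpropStep` are assumptions made for this example; real libraries such as ConvNetJS do this for you.

```javascript
// Forward pass: 2 inputs -> 2 tanh hidden units -> 1 linear output.
function forward(net, x) {
  const hidden = net.w1.map((row, j) =>
    Math.tanh(row[0] * x[0] + row[1] * x[1] + net.b1[j]));
  const output = net.w2[0] * hidden[0] + net.w2[1] * hidden[1] + net.b2;
  return { hidden, output };
}

// Squared-error loss for a single example.
function loss(net, x, target) {
  const diff = forward(net, x).output - target;
  return 0.5 * diff * diff;
}

// One backpropagation step: the error flows from the output layer back to the input layer.
function backpropStep(net, x, target, learningRate) {
  const { hidden, output } = forward(net, x);
  // Error at the output (derivative of 0.5 * (output - target)^2).
  const outputDelta = output - target;
  for (let j = 0; j < 2; j++) {
    // Share of the error attributed to hidden unit j: scaled by its outgoing
    // weight and by the slope of tanh, which is 1 - tanh^2.
    const hiddenDelta = outputDelta * net.w2[j] * (1 - hidden[j] * hidden[j]);
    // The bigger the contribution, the bigger the correction.
    net.w2[j] -= learningRate * outputDelta * hidden[j];
    net.w1[j][0] -= learningRate * hiddenDelta * x[0];
    net.w1[j][1] -= learningRate * hiddenDelta * x[1];
    net.b1[j] -= learningRate * hiddenDelta;
  }
  net.b2 -= learningRate * outputDelta;
}

// Arbitrary starting weights, chosen only for illustration.
const net = {
  w1: [[0.5, 0.3], [0.2, 0.8]],
  b1: [0.1, -0.1],
  w2: [0.7, -0.4],
  b2: 0.05,
};
const x = [1.0, 2.0];
const target = 1.0;

const lossBefore = loss(net, x, target);
backpropStep(net, x, target, 0.1);
const lossAfter = loss(net, x, target);
console.log(lossBefore, lossAfter); // the loss should shrink after the step
```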

Deep Neural Networks

- Deep Neural Networks have more than one hidden layer

- in theory, they can approximate any continuous function

- probably best predictive power among all machine learning strategies

- Convolutional Deep Neural Networks are a variant specialized in computer vision

Part II: Doing the magic using ConvNetJS and TensorFlow Playground

Why JavaScript for Machine Learning?

- Python and R are predominant

- Have a large and mature set of libs

- Are reasonably fast

- Using binding to C/C++ or Fortran

- JavaScript has benefits, though

- might be the language you are most comfortable with

- might be the only language around (because all you have is a browser)

- zero installation, easy to get started

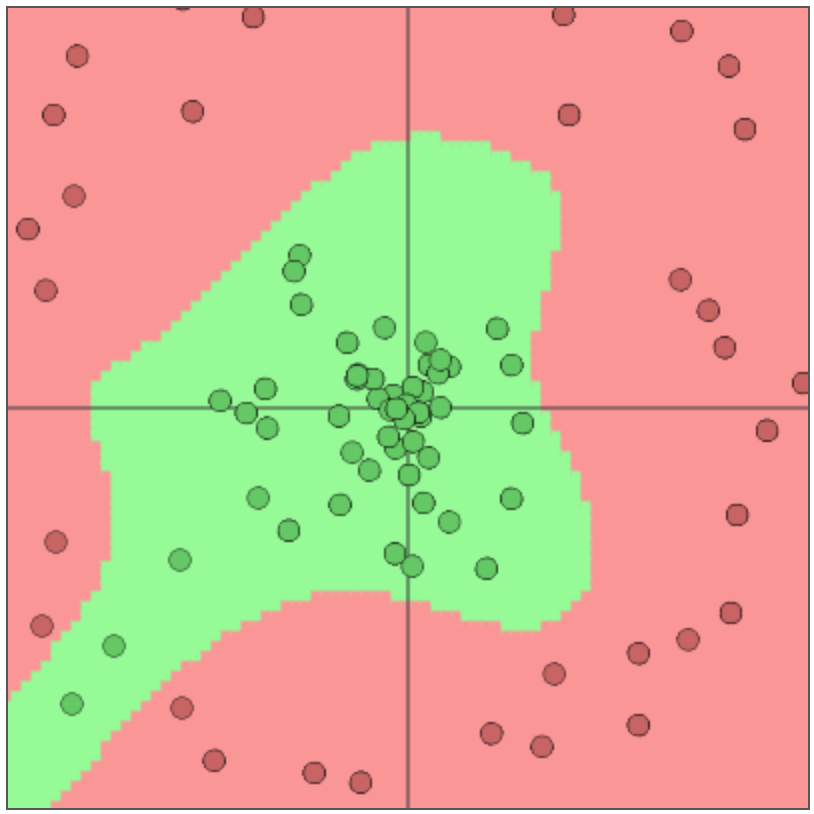

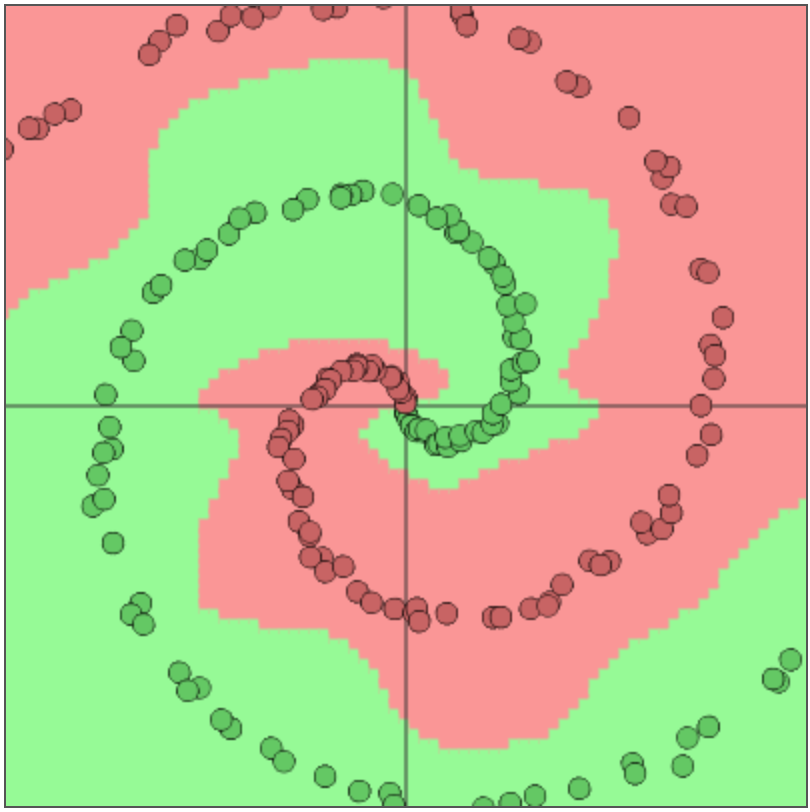

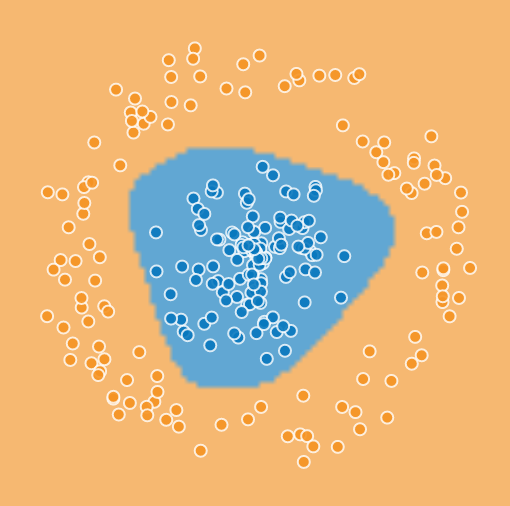

ConvNetJS: Example

Interactive classifier using deep neural network

Supervised vs Unsupervised Learning

- supervised learning: train a system by giving input and output

- unsupervised learning: find previously unknown patterns in data, e.g.

- clustering data using K-means or

- finding correlations to reduce dimensions using PCA
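For contrast with the supervised examples in the rest of the talk, here is a rough sketch of K-means on one-dimensional data. No labels are given; the algorithm only sees the points. The function name `kMeans`, the starting centroids and the data are made up for this illustration.

```javascript
// Lloyd's algorithm for k-means on 1-D data (illustrative sketch).
function kMeans(points, initialCentroids, iterations) {
  let centroids = initialCentroids.slice();
  let assignments = [];
  for (let iter = 0; iter < iterations; iter++) {
    // Assignment step: each point joins its nearest centroid.
    assignments = points.map(p => {
      let best = 0;
      for (let c = 1; c < centroids.length; c++) {
        if (Math.abs(p - centroids[c]) < Math.abs(p - centroids[best])) best = c;
      }
      return best;
    });
    // Update step: each centroid moves to the mean of its points.
    centroids = centroids.map((old, c) => {
      const members = points.filter((_, i) => assignments[i] === c);
      if (members.length === 0) return old; // leave empty clusters where they are
      return members.reduce((a, b) => a + b, 0) / members.length;
    });
  }
  return { centroids, assignments };
}

// Two obvious groups, but no labels telling the algorithm so.
const points = [1.0, 1.2, 0.8, 5.0, 5.3, 4.7];
const clusters = kMeans(points, [0, 10], 10);
console.log(clusters.assignments); // [0, 0, 0, 1, 1, 1]
```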

Code for classifier

layer_defs = [

{type:'input', out_sx:1, out_sy:1, out_depth:2},

{type:'fc', num_neurons:6, activation: 'tanh'},

{type:'fc', num_neurons:2, activation: 'tanh'},

{type:'softmax', num_classes:2}];

net = new convnetjs.Net();

net.makeLayers(layer_defs);

trainer = new convnetjs.Trainer(net);

Classifier Example - Prediction

var point = new convnetjs.Vol(1,1,2); // needs to match input layer

point.w = [3.0, 4.0];

var prediction = net.forward(point);

// probability of classes in .w

if (prediction.w[0] > prediction.w[1]) {

// class 0 is more likely: red

} else {

// class 1 is more likely: green

}

Predictions will also be painted as background colors

Classifier Example - Training

var data = [[-0.4326, 1.1909], [3.0, 4.0], [1.8133, 1.0139 ]];

var labels = [1, 1, 0];

var N = labels.length;

var avloss = 0.0;

for (var iter=0; iter < 20; iter++) {

for (var ix=0; ix < N; ix++) {

var point = new convnetjs.Vol(1,1,2);

var label = labels[ix];

point.w = data[ix];

var stats = trainer.train(point, label);

avloss += stats.loss;

}

}

// make this as small as possible

avloss /= N * 20; // 20 = number of training iterations in the loop above

Trainer turns learning into a numerical optimisation problem of weight parameters

ConvNetJS uses stochastic gradient descent by default
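What stochastic gradient descent does can be shown without any library. The sketch below is an assumption-laden toy, not ConvNetJS internals: it fits the two parameters of a line `y = w * x + b` by looking at one example at a time and moving each parameter a small step against the gradient of the squared error. The name `sgdFit`, the data and the hyperparameters are invented for the example.

```javascript
// Fit y = w * x + b with plain stochastic gradient descent:
// one example at a time, one small step per example.
function sgdFit(xs, ys, learningRate, epochs) {
  let w = 0;
  let b = 0;
  for (let epoch = 0; epoch < epochs; epoch++) {
    for (let i = 0; i < xs.length; i++) {
      // Prediction error for this single example.
      const error = (w * xs[i] + b) - ys[i];
      // Gradient of 0.5 * error^2 with respect to w is error * x, with respect to b is error.
      w -= learningRate * error * xs[i];
      b -= learningRate * error;
    }
  }
  return { w, b };
}

// Data generated from y = 2x + 1, so the fit should approach w = 2, b = 1.
const xs = [0, 1, 2, 3];
const ys = [1, 3, 5, 7];
const fit = sgdFit(xs, ys, 0.05, 2000);
console.log(fit);
```

A neural network trainer does the same thing, only with many more weights and with backpropagation supplying the gradients.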

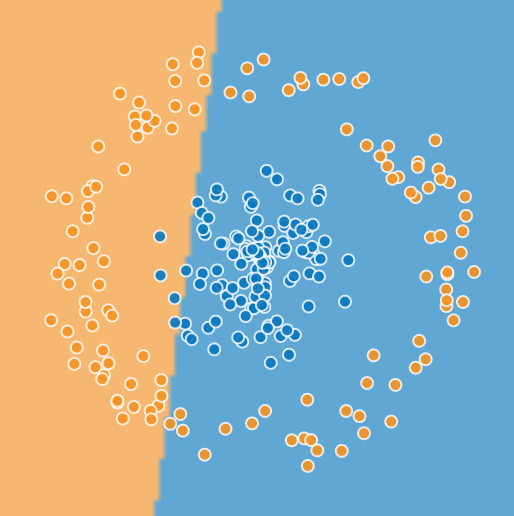

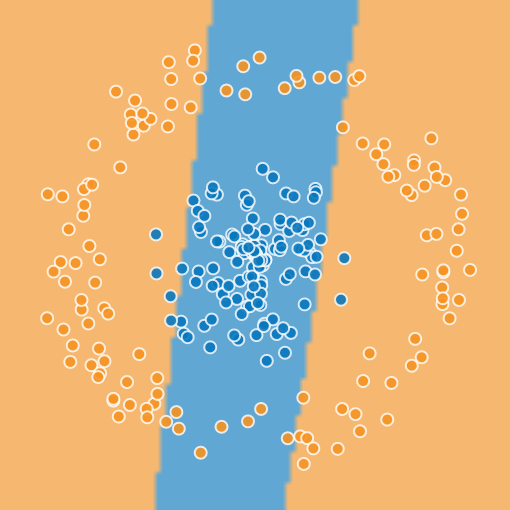

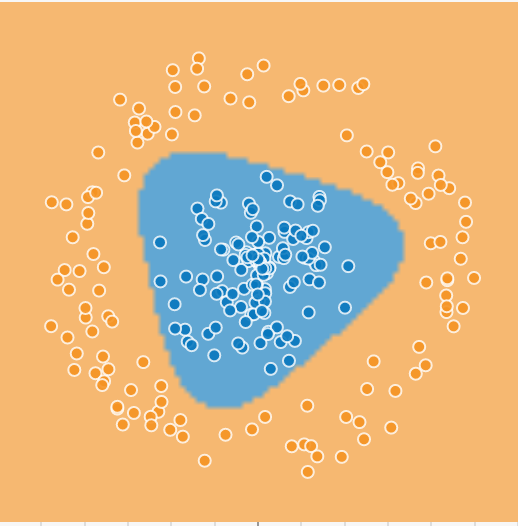

Separability with TensorFlow Playground

Just one hidden layer, number of perceptrons variable

1, 2, 3, 5

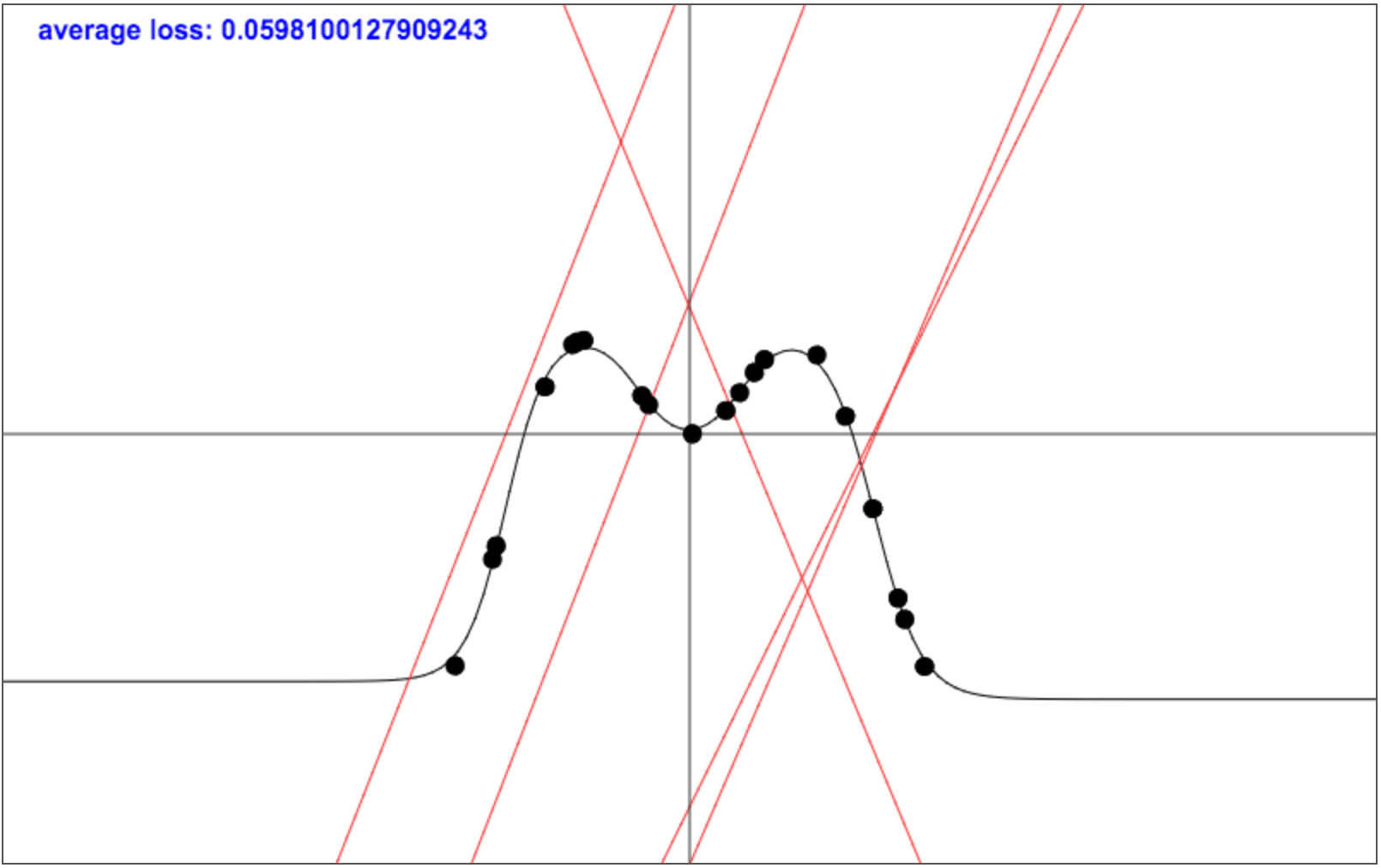

Regression

Does not classify, but tries to find a continuous function that fits the data points as closely as possible

Regression Example - Code

Quick Quiz: How many neurons in hidden layer?

layer_defs = [

{type:'input', out_sx:1, out_sy:1, out_depth:1},

{type:'fc', num_neurons:5, activation:'sigmoid'},

{type:'regression', num_neurons:1}];

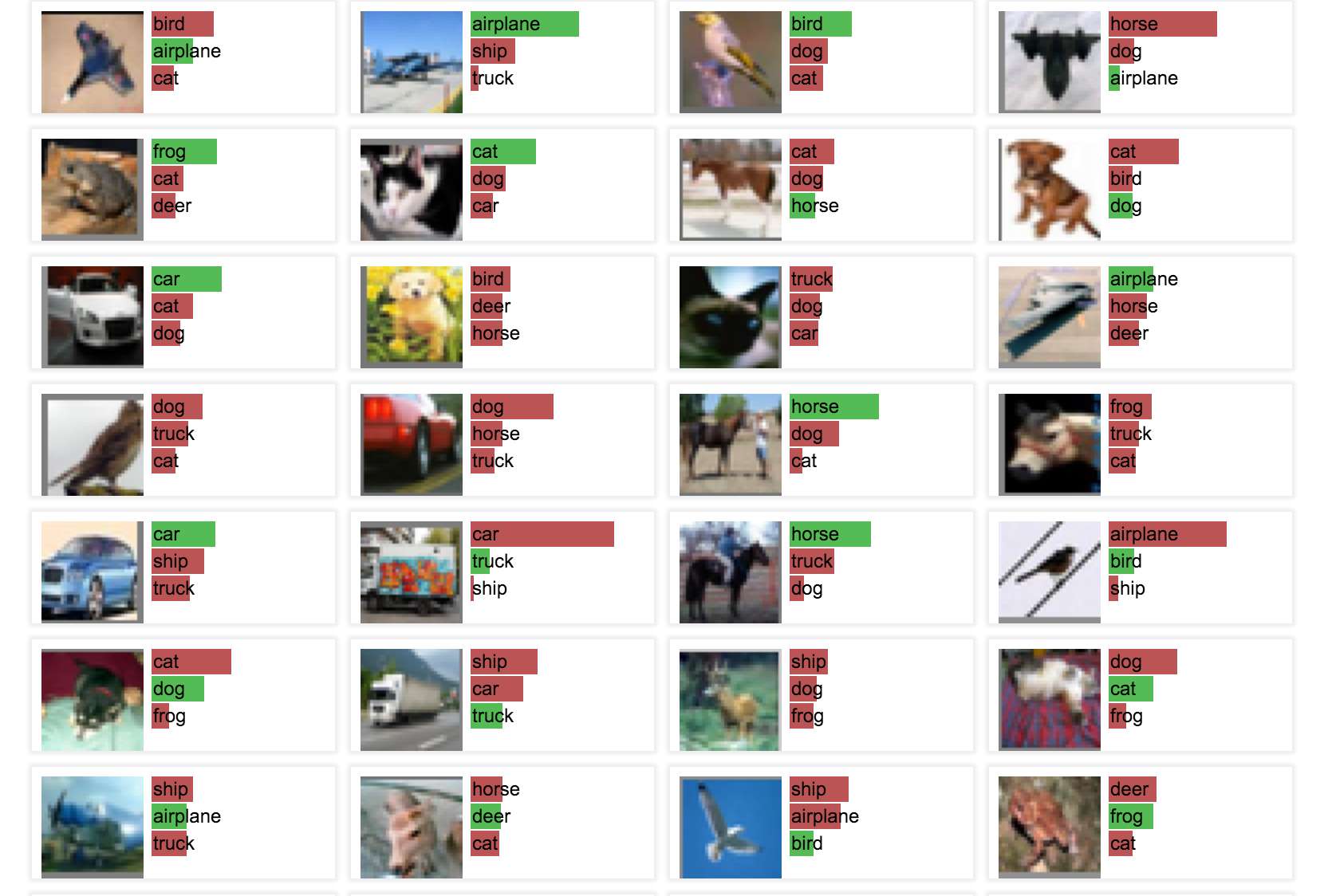

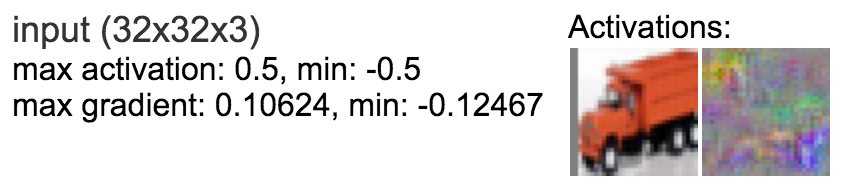

CIFAR-10 with Convolutional Deep Neural Networks

uses convolutional hidden layers for filtering

The CIFAR-10 dataset

Tiny 32x32 images in 10 classes, 6000 per class (5000 of them in the training set, 1000 in the test set)

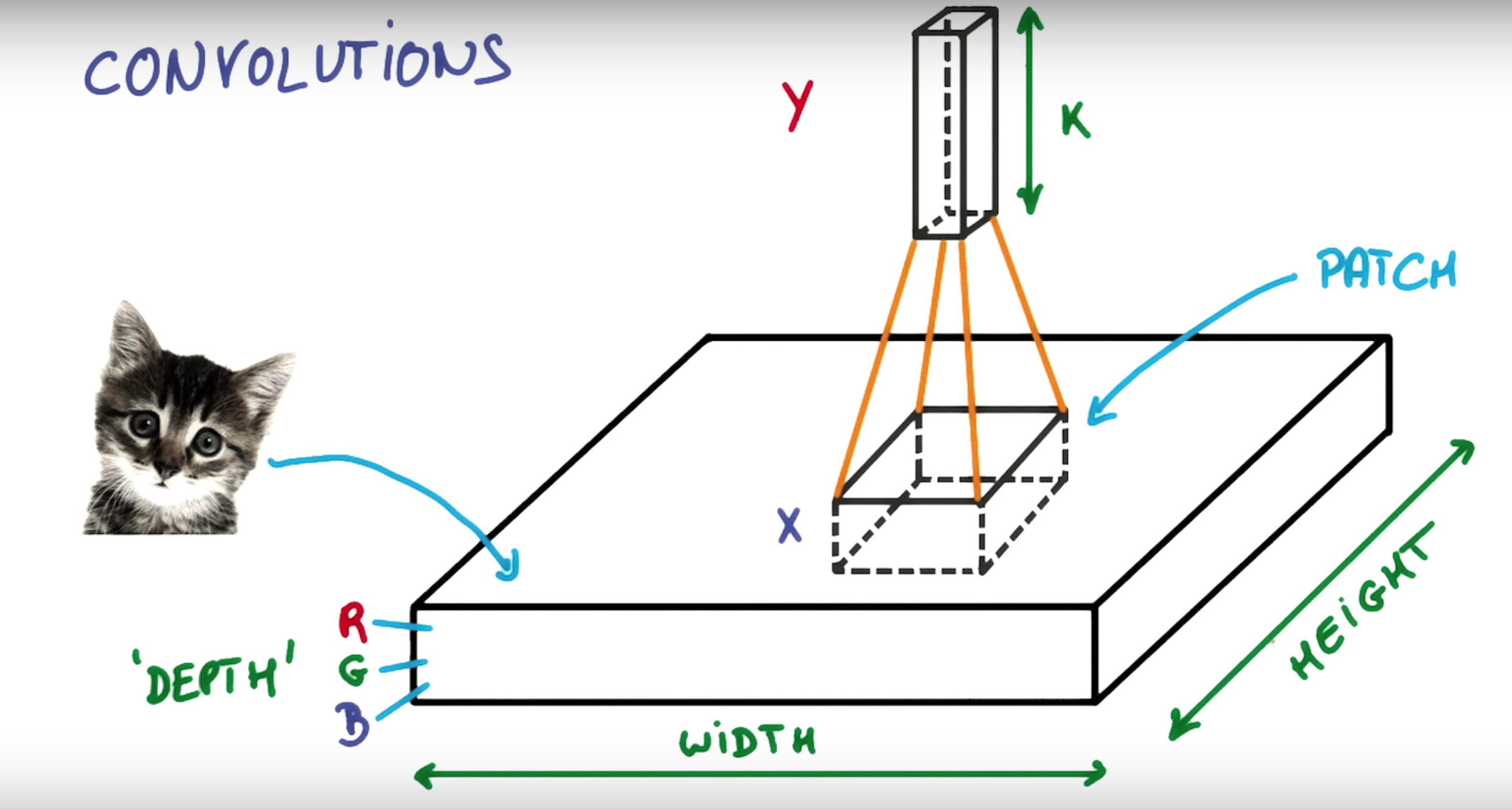

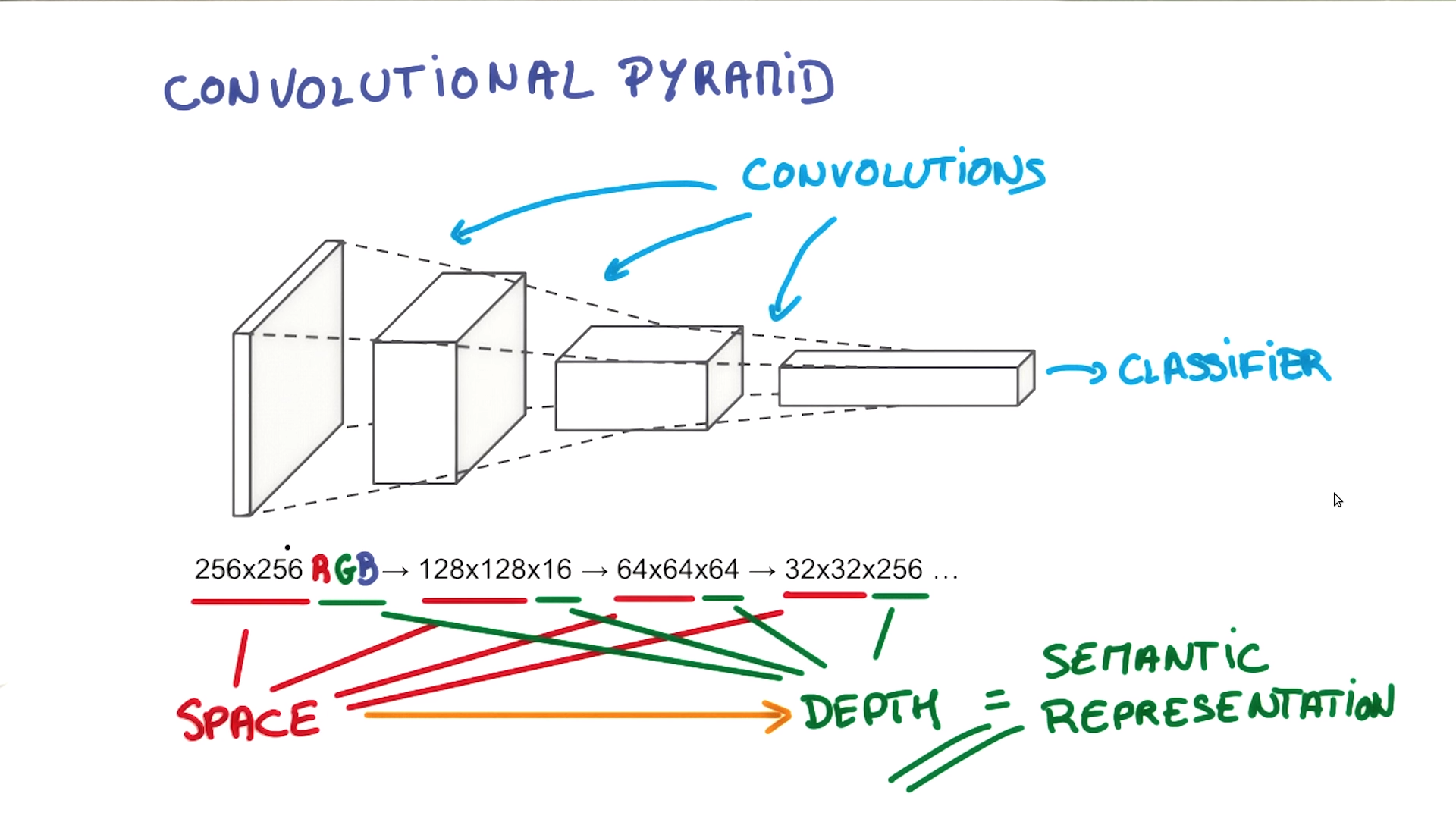

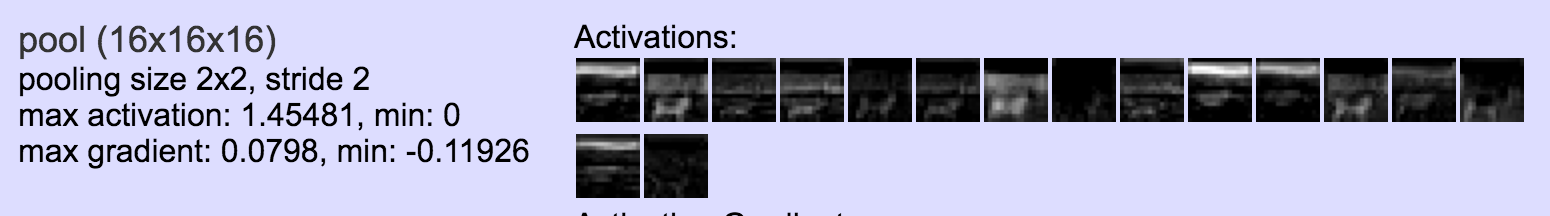

The Convolutional Pyramid

Udacity Course 730, Deep Learning (L3 Convolutional Neural Networks > Convolutional Networks)

CIFAR-10 Example - Code

layer_defs = [

{type:'input', out_sx:32, out_sy:32, out_depth:3},

{type:'conv', sx:5, filters:16, stride:1, pad:2, activation:'relu'},

{type:'pool', sx:2, stride:2},

{type:'conv', sx:5, filters:20, stride:1, pad:2, activation:'relu'},

{type:'pool', sx:2, stride:2},

{type:'conv', sx:5, filters:20, stride:1, pad:2, activation:'relu'},

{type:'pool', sx:2, stride:2},

{type:'softmax', num_classes:10}];
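It can help to trace how the volume shrinks from layer to layer. The sketch below is a stand-alone calculation, not a ConvNetJS call: `outputSize` and `traceShapes` are hypothetical helpers that apply the usual formula `floor((input - filter + 2 * pad) / stride) + 1` to the conv and pool layers of the definition above.

```javascript
// Output size along one dimension for a conv or pool layer.
function outputSize(input, filter, pad, stride) {
  return Math.floor((input - filter + 2 * pad) / stride) + 1;
}

// Conv and pool layers from the CIFAR-10 definition. The softmax layer is left
// out because it only maps the final volume to 10 class probabilities.
const layers = [
  { type: 'conv', sx: 5, filters: 16, stride: 1, pad: 2 },
  { type: 'pool', sx: 2, stride: 2 },
  { type: 'conv', sx: 5, filters: 20, stride: 1, pad: 2 },
  { type: 'pool', sx: 2, stride: 2 },
  { type: 'conv', sx: 5, filters: 20, stride: 1, pad: 2 },
  { type: 'pool', sx: 2, stride: 2 },
];

// Returns the [width, height, depth] of the volume after each layer.
function traceShapes(width, height, depth, layerList) {
  const shapes = [[width, height, depth]];
  for (const layer of layerList) {
    const pad = layer.pad || 0;
    width = outputSize(width, layer.sx, pad, layer.stride);
    height = outputSize(height, layer.sx, pad, layer.stride);
    if (layer.type === 'conv') depth = layer.filters; // pooling keeps the depth
    shapes.push([width, height, depth]);
  }
  return shapes;
}

const shapes = traceShapes(32, 32, 3, layers);
const shapeSummary = shapes.map(s => s.join('x')).join(' -> ');
console.log(shapeSummary);
// 32x32x3 -> 32x32x16 -> 16x16x16 -> 16x16x20 -> 8x8x20 -> 8x8x20 -> 4x4x20
```

This is the "pyramid": the spatial size halves with every pooling layer while the depth (number of filters) grows or stays constant.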

Input: 32x32 RGB images

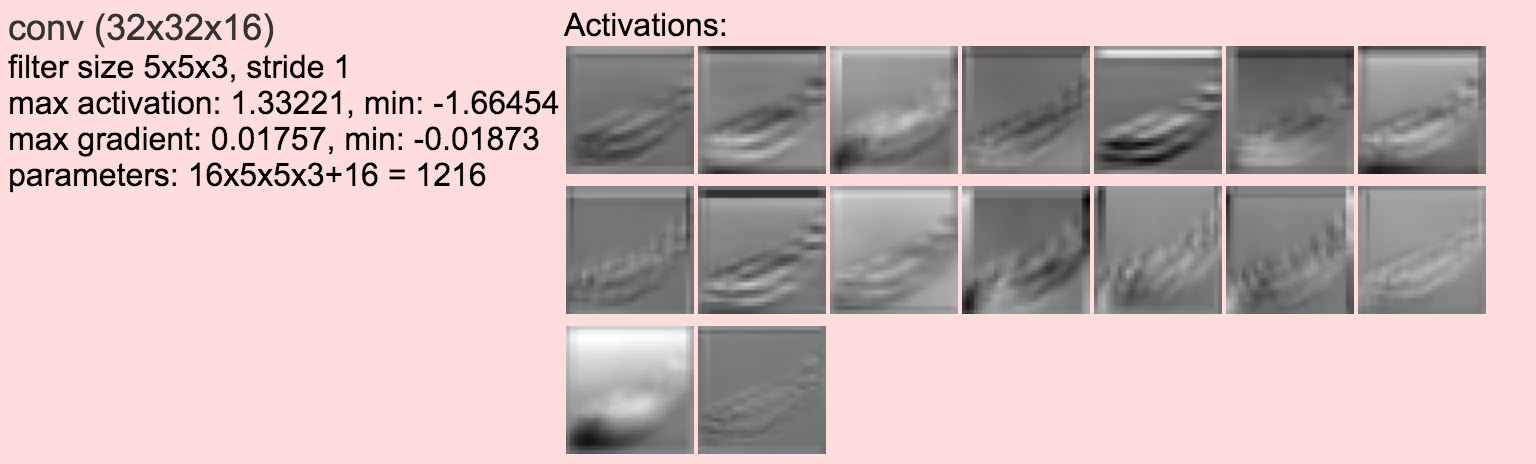

Convolutions

Convolution (Filtering) step #1: 16 32x32 images of filtered data

// 16 5x5 filters will be convolved with the input

// output again 32x32 as stride (step width) is 1

// each edge is padded with 2 zero pixels to match the 5x5 filter

{type:'conv', sx:5, filters:16, stride:1, pad:2, activation:'relu'}

convolutional layers remove noise, add semantics
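A rough sketch of what a single filter in such a layer computes, for one channel only. This is plain JavaScript written for illustration, not how ConvNetJS implements it; `conv2d`, the box-blur filter and the checkerboard test image are assumptions. As in most deep learning libraries, the filter is slid over the image without being flipped, and ReLU is applied to each result.

```javascript
// Slide a square filter over a square single-channel image (2-D array),
// with zero padding and a given stride, then apply ReLU.
function conv2d(image, filter, pad, stride) {
  const size = image.length;   // assumes a square image
  const f = filter.length;     // assumes a square filter
  const outSize = Math.floor((size - f + 2 * pad) / stride) + 1;
  const out = [];
  for (let oy = 0; oy < outSize; oy++) {
    const row = [];
    for (let ox = 0; ox < outSize; ox++) {
      let sum = 0;
      for (let fy = 0; fy < f; fy++) {
        for (let fx = 0; fx < f; fx++) {
          const y = oy * stride + fy - pad;
          const x = ox * stride + fx - pad;
          // Pixels outside the image count as 0 (zero padding).
          if (y >= 0 && y < size && x >= 0 && x < size) {
            sum += image[y][x] * filter[fy][fx];
          }
        }
      }
      row.push(Math.max(0, sum)); // ReLU activation
    }
    out.push(row);
  }
  return out;
}

// A 5x5 box blur as an example filter (a trained network learns its own filters).
const blur = Array.from({ length: 5 }, () => new Array(5).fill(1 / 25));

// A 32x32 checkerboard as a stand-in for one colour channel of an image.
const image = Array.from({ length: 32 }, (_, y) =>
  Array.from({ length: 32 }, (_, x) => (x + y) % 2));

const filtered = conv2d(image, blur, 2, 1);
console.log(filtered.length, filtered[0].length); // 32 32
```

With 16 filters and 3 input channels, the layer repeats this 16 times, each time summing over all 3 channels, which gives the 16 filtered 32x32 images.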

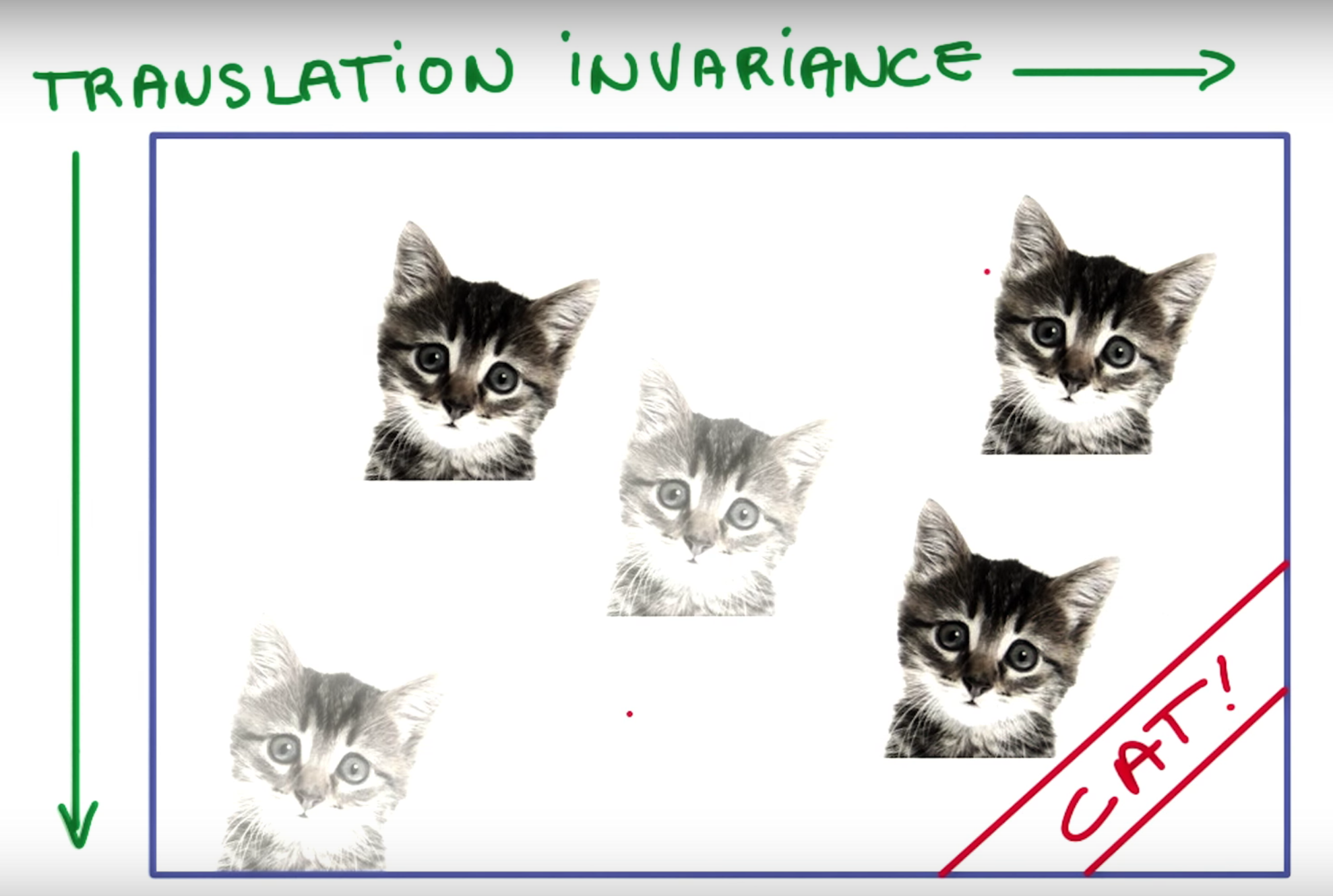

Translation Invariance

Pooling

// perform max pooling in 2x2 non-overlapping neighborhoods

// output will be 16x16 as stride (step width) is 2

{type:'pool', sx:2, stride:2}

pooling layers in between provide translation invariance
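Max pooling itself is only a few lines. The following is an illustrative sketch (the helper `maxPool` and the sample values are made up); it keeps the largest value in each 2x2 neighbourhood and moves on by 2 pixels.

```javascript
// Max pooling over a square single-channel image (2-D array).
function maxPool(image, size, stride) {
  const outSize = Math.floor((image.length - size) / stride) + 1;
  const out = [];
  for (let oy = 0; oy < outSize; oy++) {
    const row = [];
    for (let ox = 0; ox < outSize; ox++) {
      let max = -Infinity;
      for (let dy = 0; dy < size; dy++) {
        for (let dx = 0; dx < size; dx++) {
          max = Math.max(max, image[oy * stride + dy][ox * stride + dx]);
        }
      }
      row.push(max);
    }
    out.push(row);
  }
  return out;
}

const input = [
  [1, 3, 2, 0],
  [4, 2, 1, 1],
  [0, 0, 5, 6],
  [1, 2, 7, 8],
];
const pooled = maxPool(input, 2, 2);
console.log(pooled); // [[4, 2], [2, 8]]
```

Moving the 4 anywhere inside its 2x2 block leaves the output unchanged, which is where the (limited) translation invariance comes from.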

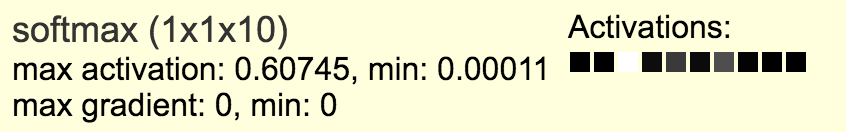

Softmax

{type:'softmax', num_classes:10}

assigns a probability to each of the 10 categories
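How scores become probabilities, as a small sketch. The function below is the standard softmax formula written in plain JavaScript; the ten scores are invented for the example and do not come from a trained network.

```javascript
// Softmax: exponentiate each score and normalise so the results sum to 1.
function softmax(scores) {
  const max = Math.max(...scores); // subtracting the max avoids overflow in exp
  const exps = scores.map(s => Math.exp(s - max));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map(e => e / total);
}

// Ten hypothetical scores, one per CIFAR-10 class.
const scores = [2.0, 1.0, 0.1, -1.0, 0.5, 0.0, -0.5, 1.5, 0.3, -2.0];
const probabilities = softmax(scores);

// The predicted class is the one with the highest probability.
const predictedClass = probabilities.indexOf(Math.max(...probabilities));
console.log(predictedClass, probabilities);
```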

Sample classification results

Wrap-Up

- setting up a NN is insanely easy

- preparing data and making results visible often is much harder

- the browser makes Deep Learning even more accessible

- all the fancy deep learning stuff works in the browser

- especially good for learning and education

- direct visualization and interactivity

Resources

- TensorFlow Playground in the Browser

- Essentials of Machine Learning Algorithms (with Python and R Codes)

- A visual introduction to machine learning

- Udacity 3 minute introduction to Neural Networks and Convolutional Networks

- Theoretical Motivations for Deep Learning

- Deep Learning for Robots

- Getting dirty with the math of Deep Learning

- TensorFlow: Google's Machine Learning Library

- Baidu Chief Scientist: Deep Learning changes the world

Bonus Resources: AlphaGo

Thank you!

Questions / Discussion

Bonus Material

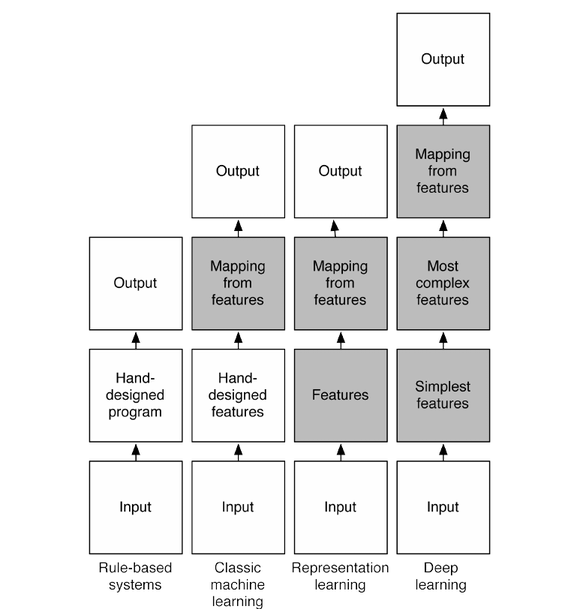

Parts that can learn from data

By AI strategy (gray)

http://rinuboney.github.io/2015/10/18/theoretical-motivations-deep-learning.html

Requirements on manual preparation of data

- Rule-based systems: AI systems hand-designed by experts, no learning

- Classic machine learning: features are hand-designed, most time is spent on designing the optimal features, a generic classifier then learns from them

- Representation learning: important features are automatically discovered

- Deep learning: like representation learning, but multiple layers for powerful abstraction

Other JavaScript Libraries for Deep Neural Networks

- Brain.js

- Unmaintained :(

- Still interesting to get ideas from

- Synaptic.js

- small general lib for Neural Network

- nice and simple API